Bias Propagation in Generative AI: Risk and Mitigation Strategies

Keywords:

Generative AI, Bias propagation, Fairness, Risk mitigation, Algorithmic bias, Model auditing, Explainability, Deep learning

Abstract

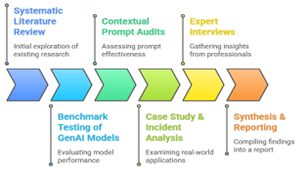

Generative Artificial Intelligence (GenAI) is a rapidly evolving domain that is transforming content creation, decision-making and human-computer interaction across many industries. However, because these systems absorb and replicate massive amounts of uncurated data from the internet and proprietary sources, they risk learning, amplifying and propagating deep-seated social and statistical biases. From biased image generation to discriminatory language outputs, GenAI models not only reflect but also exacerbate the inequities and stereotypes present in their training data. This study offers a critical, data-driven analysis of bias propagation in GenAI, characterising the associated risks from a technical rather than a purely ethical perspective. We systematically review bias in well-known models, categorise the observed biases by type and quantify their impact on downstream tasks. Our blended methodology combines a literature review, dataset analyses and scenario-based simulation to identify where bias enters the GenAI pipeline, how it emerges and how it evolves from one stage to the next. We then analyse the strengths and weaknesses of several existing mitigation approaches, including data selection, adversarial training, post-processing and explainability, and benchmark them empirically. Our results indicate that technical measures can mitigate certain forms of bias. However, responsible GenAI also requires attention to social context, checks and balances, and regulatory frameworks. We therefore provide concrete recommendations and a research agenda for building fairer generative systems.